As of August 2015

| Faculty/Department | Department of Information System Fundamentals, Graduate School of Information Systems |
|---|---|
| Members | Hiroki HONDA, Professor |
| Affiliations | Institute of Electrical and Electronics Engineers (IEEE), Association for Computing Machinery (ACM), Information Processing Society of Japan, Institute of Electronics, Information and Communication Engineers |
| Website | http://www.hpc.is.uec.ac.jp/honda/ |

High-performance computing, GPU computing, GPGPU, grid computing, parallel processing compiler, end-user assisting technology

To increase processing speed, parallel processing on systems composed of multiple processors is coming into widespread use.

As the throughput of computer networks grows, research is also focusing on parallel processing configurations that treat a group of networked computers as a single multiprocessor system.

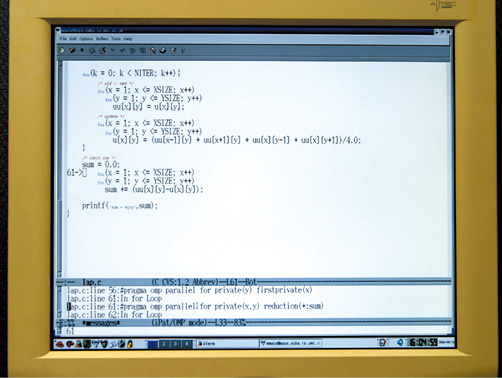

To make high-performance parallel computing as transparent and convenient as possible, the tasks that users must perform themselves should be kept to a minimum. This can be achieved by developing technologies that automate various aspects of the parallel processing environment.

In light of these developments, our laboratory is working to develop parallel processing techniques and end-user assisting technologies to maximize the benefits and performance of high-performance computing systems.

Power consumption constraints have caused single-CPU performance to level off, prompting a shift in research toward GPUs (Graphics Processing Units) and multicore processors, which offer higher performance per unit of power consumed.

However, typical parallel processing configurations built on these processors require fine tuning specific to each processor and programming method, which poses various difficulties.

To overcome these difficulties, our laboratory is developing a programming framework that simplifies tuning by handling these different processors in a more uniform way.

Another area to which we devote considerable effort is technology that supports end users, with the goal of taking full advantage of the performance that high-performance computing systems make possible.

At present, the latent performance of high-performance computing systems generally cannot be fully realized unless users have advanced skills and can carry out complicated tuning procedures. This hinders the wider adoption of these systems and calls for technologies that assist and guide end users.

We are also developing grid middleware technologies. The component technologies required to configure a grid include cooperative problem-solving technologies, resource sharing technologies for geographically dispersed environments, and construction and management technologies for virtual composite organizations.

We already offer a library of technologies we developed previously, including automated remote installation and dynamic server switching. These should prove essential in reducing time-consuming tasks on the user side.

We are also investigating low-power processor architectures, as well as macro-dataflow processing, to overcome the limitations of conventional parallel processing.
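Macro-dataflow processing is only named here, not described. As a generic illustration (not the laboratory's own system), the Python sketch below treats a program as a graph of coarse-grained tasks ("macro-tasks") and starts each task as soon as every task it depends on has finished, rather than following a fixed sequential order. The helper name `run_macro_dataflow`, the example task graph, and the use of Python's `ThreadPoolExecutor` as a stand-in for a pool of processors are all hypothetical choices made for this sketch; it demonstrates the scheduling idea, not real speedup.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


def run_macro_dataflow(tasks, deps, max_workers=4):
    """Run coarse-grained tasks in dataflow order (hypothetical helper).

    tasks: maps a task name to a callable that receives a dict of its
           predecessors' results and returns its own result.
    deps:  maps a task name to the names of the tasks it depends on.
    """
    # Dependencies still to be satisfied, for each task not yet started.
    remaining = {name: set(deps.get(name, ())) for name in tasks}
    results = {}   # name -> result of each finished task
    running = {}   # future -> name of each task currently in flight
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while remaining or running:
            # A task "fires" once every predecessor has produced a result.
            ready = [n for n, pre in remaining.items() if pre.issubset(results)]
            for name in ready:
                inputs = {p: results[p] for p in deps.get(name, ())}
                running[pool.submit(tasks[name], inputs)] = name
                del remaining[name]
            if not running:
                # Nothing can fire and nothing is in flight: unsatisfiable graph.
                raise ValueError("cyclic or missing dependencies")
            # Block until at least one task finishes, then record its result.
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for fut in done:
                results[running.pop(fut)] = fut.result()
    return results


# Example: a small diamond-shaped task graph.
tasks = {
    "load": lambda inp: [1, 2, 3, 4],
    "sum": lambda inp: sum(inp["load"]),
    "max": lambda inp: max(inp["load"]),
    "report": lambda inp: f"sum={inp['sum']}, max={inp['max']}",
}
deps = {"sum": ["load"], "max": ["load"], "report": ["sum", "max"]}

print(run_macro_dataflow(tasks, deps)["report"])  # sum=10, max=4
```

Here `sum` and `max` depend only on `load`, so both become ready as soon as `load` finishes and can run concurrently, while `report` fires only after both complete. A real macro-dataflow system would also have to decide task granularity and schedule data transfers between processors, which this sketch ignores.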

Within the scope of the JST Strategic Basic Research Programs (CREST Project; 2007-2012) in which we participate, we have completed and presented a proposal to develop a ULP-HPC (Ultra Low Power HPC) capable of achieving a 1000-fold enhancement in performance per unit of power consumption by 2017.
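As a rough illustration of what this target implies, assume (the text does not state a baseline year) that the 1000-fold gain is measured over the ten years from the project's 2007 start to 2017. The required average annual improvement factor $r$ in performance per unit of power is then:

$$
r^{10} = 1000 \quad\Longrightarrow\quad r = 1000^{1/10} = 10^{0.3} \approx 2.0
$$

Under that assumption, performance per unit of power would need to roughly double every year.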

As part of this project, we are developing a unifying programming framework that will make it possible to tune power consumption and performance parameters for GPUs, ClearSpeed and FPGA accelerators, and Cell processors.

The core theme of the research at our laboratory is high-performance computing. Our specific research topics span the full spectrum, from processor architecture to system architecture and from system software to applications. This breadth lets us pursue R&D with a bird's-eye view of the whole landscape, rather than being confined to individual component technologies.

To build a high-performance computing substrate, one must exploit the parallelism intrinsic to applications while balancing it against power consumption, and then implement parallel processing at every tier, from the chip up to geographically dispersed environments such as the grid. One of our future goals is to build a high-performance computing system that organically integrates, at the application level, the parallel processing methods developed in each of our studies.